I’ll do an exercise to build a distance matrix. For that we only need the function spDistsN1 from the “sp” library.

The basic use of the function is the following:

library(sp)

data(meuse)

coordinates(meuse) <- c("x", "y")

spDistsN1(meuse, meuse[1, ], longlat = FALSE)  # meuse x/y are projected metres, not lon/lat
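To make the output concrete, here is a minimal base R sketch of what a single call computes when the coordinates are planar: the straight-line distance from every row to one reference point. The helper name dist_to_point and the three toy coordinates are made up for illustration; it is a stand-in for spDistsN1 with longlat = FALSE, not the package's implementation.

```r
# Hypothetical stand-in for spDistsN1(pts, pt, longlat = FALSE):
# Euclidean distance from every row of `pts` to the single point `pt`.
dist_to_point <- function(pts, pt) {
  sqrt((pts[, 1] - pt[1])^2 + (pts[, 2] - pt[2])^2)
}

# Toy planar coordinates (made up), one point per row.
pts <- cbind(x = c(0, 3, 0), y = c(0, 4, 5))

# Distances from all points to the first one: one value per row.
d1 <- dist_to_point(pts, pts[1, ])
print(d1)
```

The result is a plain numeric vector with one entry per point, which is why a single call only ever fills one column of the distance matrix.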

The limitation of spDistsN1 is that it only returns the distances to the first element (or whichever row is given), because the function measures the distance from every element to a single geometry (meuse[1, ]). To solve that we can use the apply function:

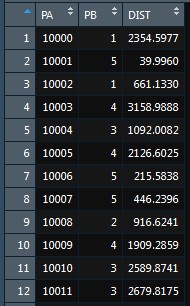

apply(meuse@coords, 1, function(x) spDistsN1(meuse@coords, x, longlat = FALSE))
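One way to sanity-check the result is to look at its shape: it should be a square, symmetric matrix with zeros on the diagonal. The sketch below applies the same apply pattern to made-up planar coordinates, with a hypothetical helper in place of spDistsN1, and compares the result against base R's dist(), which covers the Euclidean case directly.

```r
# Hypothetical Euclidean stand-in for spDistsN1(..., longlat = FALSE).
dist_to_point <- function(pts, pt) {
  sqrt((pts[, 1] - pt[1])^2 + (pts[, 2] - pt[2])^2)
}

# Toy planar coordinates (made up), one point per row.
pts <- cbind(x = c(0, 3, 0, 6), y = c(0, 4, 5, 8))

# Same pattern as above: column i holds the distances to point i.
d_apply <- apply(pts, 1, function(p) dist_to_point(pts, p))

# Base R reference for Euclidean distances, with dimnames dropped.
d_base <- unname(as.matrix(dist(pts)))

dim(d_apply)                # one row and one column per point
isSymmetric(d_apply)        # distance i -> j equals distance j -> i
all(diag(d_apply) == 0)     # each point is at distance 0 from itself
all.equal(d_apply, d_base)  # agreement with dist() for planar data
```

For purely planar data, dist() alone may be enough; the apply pattern is mainly useful when the per-point function does something dist() cannot, such as great-circle distances.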

This is a great solution, but it becomes a problem when the data set is large: the computation takes a long time. (A plain for loop does the same amount of work, so it would not help, and one that grows its result at each step could be slower still.)

To solve this problem we can parallelize the computation. As you might guess, the function parApply from the “parallel” library works just like apply, but distributes the work across a cluster of worker processes:

library(sp)

library(parallel)

m.coord <- meuse@coords

ncore <- detectCores()

cl <- makeCluster(ncore)

clusterExport(cl, c("m.coord"))

clusterEvalQ(cl, library(sp))

parApply(cl = cl, X = m.coord, MARGIN = 1,
         FUN = function(x) spDistsN1(m.coord, x, longlat = FALSE))

stopCluster(cl)
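As a quick check that the parallel version returns the same matrix as the serial one, here is a self-contained sketch using only base R and the parallel package. It uses made-up coordinates, a hypothetical Euclidean helper in place of spDistsN1, and two workers rather than detectCores(). Passing the coordinates through parApply's extra arguments is a design choice of this sketch that avoids the clusterExport step.

```r
library(parallel)

# Hypothetical Euclidean stand-in for spDistsN1(..., longlat = FALSE).
dist_to_point <- function(pt, pts) {
  sqrt((pts[, 1] - pt[1])^2 + (pts[, 2] - pt[2])^2)
}

# Made-up planar coordinates, one point per row.
set.seed(1)
pts <- cbind(x = runif(50, 0, 1000), y = runif(50, 0, 1000))

# Serial reference: column i holds the distances to point i.
d_serial <- apply(pts, 1, dist_to_point, pts = pts)

# Two workers are assumed to be enough for this toy example.
cl <- makeCluster(2)
d_parallel <- tryCatch(
  parApply(cl, X = pts, MARGIN = 1, FUN = dist_to_point, pts = pts),
  finally = stopCluster(cl)  # release the workers even if an error occurs
)

all.equal(d_serial, d_parallel)
```

On a data set this small the cost of starting the workers will likely outweigh any gain, so the speed-up only shows with much larger inputs.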

This gives us a neat and efficient solution.

Note: I know this can easily be done with GIS software, but this way it can be incorporated into a larger, automated process.